CHERI use cases and cost benefit analysis

Author: Nick Allott nick@nqminds.com

Objectives

This is a living document that attempts to identify the distinct CHERI use cases and their relative benefits.

It has three potentially complementary uses:

- Form the basis of an easy to understand description of the options available to a CHERI adopter, and describe their relative benefits and costs.

- Feed into an ongoing piece of work, “Economics of memory safety+”, which will help define the financial value proposition for silicon vendors and their customers.

- Attempt to evaluate the pros and cons of different interventions, in particular compartmentalisation strategies. This becomes particularly important when trying to decide “where” to compartmentalise, as it is a complex multi-constraint problem; we need a better framework for understanding and evaluating the constraints.

Caveat: some of the concepts are still "work in progress". The document needs a review from a wider set of experts. There are also many uncertainties that need resolving.

Structure

In the analysis that follows we use three principal dimensions: the key tradeoffs that need to be considered when selecting a strategy.

Performance impact:

- Negative: We assume anything fully CHERI aware has some costs (as yet indeterminate but small %)

- Positive: Swapping out MMU isolation for CHERI compartment isolation could lead to significant performance improvements. Again this is not yet fully determined, but it is useful to describe envelope potential and learnings in progress.

Security impact: how does the vulnerability attack surface change for each intervention? Ideally we capture:

- Characterisation: what flavour of vulnerabilities does this model protect against

- Examples: some concrete examples of the same (CVE numbers)

- Volume %: some numerical estimate of the size of the impact (e.g. the much bandied about 70% for purecap) [4]

Transformation costs: can we characterise the porting/developer effort needed to make use of this use case? Transformation costs can be further divided:

- Preconditions: what do we assume needs to be in place before we adapt our system to CHERI. This is system wide: a cost which is not attributable to a single system to be ported, but to a whole class of systems (i.e. shared costs)

- Singular system port cost: this is the cost attributable to the singular device or application under consideration, and the developer cost to port the target code to be CHERI compliant

- Reliability cost: the additional cost needed to satisfy the reliability requirement for the target application, in light of the CHERI adaptations. CHERI will cause faults to be raised in hitherto unexpected areas, hence what additional work is needed?

Measuring security impact

How do we measure security impact?

For our current purposes we will use the concept of vulnerability reduction: a reduction in the vulnerability surface. We use this method because it is precisely measurable. An abstract measurement of security impact is hard to calibrate. Talking about attack surfaces is useful for threat modelling and design, but is likewise hard to put a number on. A reduction in vulnerabilities (whether current or historical) can be precisely evaluated post intervention.

Crudely, ΔV: the number or percentage of vulnerabilities that are rendered unexploitable after a particular intervention.

An important distinction will help with later analysis: all vulnerabilities can be broken into two sets (V = CAMV ∪ CIMV).

- CAMV: CHERI addressable memory vulnerabilities. These are vulnerabilities that we know purecap mode will “catch”. (Headline figure <70%)

- CIMV: CHERI invisible memory vulnerabilities. These are vulnerabilities that cannot be picked up by CHERI. (Headline figure >30%)

Each vulnerability will fall into one set or another. How reliably we can automatically categorise them remains to be seen.

We then have another category to consider:

- CCV: CHERI containable vulnerabilities. These are vulnerabilities that can be contained by a compartmentalisation strategy

We will see later that the intersection of CIMV and CCV will be important in determining the security additionality of compartmentalisation interventions.
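The set relationships above can be made concrete with a minimal sketch. The identifiers and set memberships are hypothetical placeholders (not real CVEs), chosen only so the headline 70/30 split is visible; `delta_v` is an illustrative helper name, not part of any existing tool.

```python
# Hypothetical vulnerability identifiers; none of these are real CVEs.
all_vulns = {"V1", "V2", "V3", "V4", "V5", "V6", "V7", "V8", "V9", "V10"}

# CAMV: vulnerabilities that purecap is assumed to catch.
camv = {"V1", "V2", "V3", "V4", "V5", "V6", "V7"}

# CIMV: everything else, so that V = CAMV ∪ CIMV and the two sets are disjoint.
cimv = all_vulns - camv

# CCV: vulnerabilities one particular compartment boundary is assumed to contain.
ccv = {"V6", "V7", "V8"}


def delta_v(mitigated, population):
    """Percentage of the population rendered unexploitable by an intervention."""
    return 100.0 * len(mitigated & population) / len(population)


purecap_impact = delta_v(camv, all_vulns)

# Additionality of the compartment over purecap is the intersection CIMV ∩ CCV.
additional = cimv & ccv
compartment_additionality = delta_v(additional, all_vulns)
```

In this toy population, two of the three containable vulnerabilities are already caught by purecap, so the compartment only adds the one that lies in CIMV ∩ CCV.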

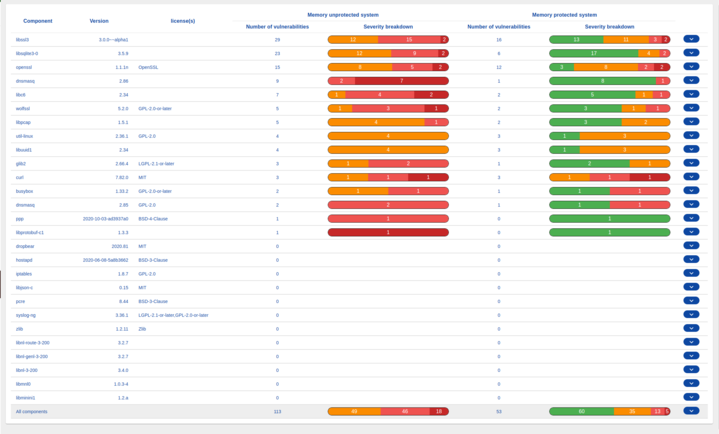

The screenshot below is an example output for a tool (work in progress), which attempts to highlight this vulnerability impact post intervention.

Counting and discriminating vulnerabilities

The question of how you count vulnerabilities is somewhat nuanced.

Some options to consider:

- Raw: count all vulnerabilities relevant to this system or component

- Exploitable: only count vulnerabilities where a known exploit exists

- Severity: only count vulnerabilities of a particular CVSS severity score

- Incidence: only count vulnerabilities against which incidents have been reported

- Actionable: only count vulnerabilities against which action is actively planned or taken

There are issues with each measure.
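To show how much the choice of counting method can move the headline number, here is a minimal sketch that applies the five options to the same records. The `Vuln` record, its field names, the sample data and the default CVSS threshold of 7.0 are all assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Vuln:
    ident: str               # placeholder identifier, not a real CVE
    cvss: float              # CVSS base score, 0.0 to 10.0
    known_exploit: bool
    reported_incidents: int
    action_planned: bool


def count(vulns, method, min_cvss=7.0):
    """Count vulnerabilities under one of the five counting options."""
    predicates = {
        "raw": lambda v: True,
        "exploitable": lambda v: v.known_exploit,
        "severity": lambda v: v.cvss >= min_cvss,
        "incidence": lambda v: v.reported_incidents > 0,
        "actionable": lambda v: v.action_planned,
    }
    return sum(1 for v in vulns if predicates[method](v))


# Hypothetical sample data.
sample = [
    Vuln("X-1", 9.8, True, 3, True),
    Vuln("X-2", 5.3, False, 0, False),
    Vuln("X-3", 7.5, False, 0, True),
    Vuln("X-4", 4.0, True, 1, False),
]

methods = ("raw", "exploitable", "severity", "incidence", "actionable")
counts = {m: count(sample, m) for m in methods}
```

Even on four records the filtered counts select different subsets, which is why a ΔV figure is only comparable across systems when the counting method is stated alongside it.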

The Microsoft “Security Analysis of CHERI ISA” paper [4], for example, implicitly uses the last of these options (actionable). Their historical vulnerability analysis relied on: “In 2019, the MSRC classified 655 vulnerabilities as memory safety issues that were rated severe enough to be fixed in a security update”. Hence an internal severity rating was used, which presumably was contextual and factored in the security considerations of the end user application. This measure has many benefits, in that it ensures the analysed vulnerabilities reflect the real world economic cost of managing them. However, if we are to have measures that can be compared across systems and markets, we need a standardised, repeatable methodology.

The second consideration is how we automatically determine whether CHERI offers sufficient protection to render a vulnerability non-exploitable. Ideally we need a deterministic decision tree that can work this out from data present in the CVE or attached CWE. If that is not possible, then we need definitive, consistent guidance on what information is needed to make this determination.

For CHERI to have real world operational value, this decision method needs to be automated. This may mean making recommendations to augment standard CVE/CWE data.
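As an illustration of what such a deterministic decision method might look like, the sketch below classifies a record from its attached CWE identifiers. The CWE groupings, the `temporal_safety` switch and the `REVIEW` fallback are assumptions for this example only; they are not an agreed taxonomy and would need validating by CHERI experts.

```python
# Illustrative CWE groupings. These are assumptions for the sketch,
# not an agreed or validated taxonomy.
SPATIAL_CWES = {"CWE-119", "CWE-125", "CWE-787"}   # bounds errors
TEMPORAL_CWES = {"CWE-415", "CWE-416"}             # double free, use after free
NON_MEMORY_CWES = {"CWE-79", "CWE-89"}             # XSS, SQL injection


def classify(cwe_ids, temporal_safety=False):
    """Return 'CAMV', 'CIMV' or 'REVIEW' for one vulnerability record."""
    ids = set(cwe_ids)
    if not ids:
        return "REVIEW"   # no CWE attached: cannot decide automatically
    if ids & SPATIAL_CWES:
        return "CAMV"
    if ids & TEMPORAL_CWES:
        # Assumed to depend on whether the platform offers temporal protection.
        return "CAMV" if temporal_safety else "CIMV"
    if ids <= NON_MEMORY_CWES:
        return "CIMV"
    return "REVIEW"       # unknown CWE: needs manual analysis
```

For example, `classify(["CWE-787"])` yields `"CAMV"`, while a record with no CWE falls through to `"REVIEW"`, which is exactly the case where augmented CVE/CWE data would be needed.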

To make things more complex, it is clear that different CHERI interventions/use cases have different protective properties. The purecap decision method may be tractable. However, mapping the impacts of custom compartments to vulnerability exploitability will be more difficult.

Measuring Transformation Cost

The effort of protecting a system comes in three parts:

- Preconditions: sunk cost, absorbed by a broad ecosystem, to put the necessary infrastructure in place to exercise the CHERI features

- System specific: the effort the target customer needs to invest in order to benefit from that particular intervention. This may be impacted significantly by component/process type

- Reliability upgrades: the effort needed to maintain the desired reliability of the system post CHERI intervention, in light of new signal faults

The numbers for development, vulnerability surface and performance are highly speculative, but to plan and formulate cost benefit trade offs we need estimates.

In this document we will only consider the precondition effort at a high level. A follow up document will explore some of the more nuanced details.

Estimating the effort needed to maintain reliability is complex, and highly market specific. For some markets (target applications), catching and trapping memory vulnerabilities capable of remote code execution could be deemed so vital that raising a critical signal fault in their place is a cost worth bearing.

For other applications, swapping an undetected memory vulnerability for a critical fault could actually be considered highly undesirable.

Hence, to address the problem fully, we need to analyse the target market, the current architecture and the proposed compartment boundaries.

To illustrate:

- Placing a component boundary around a threaded system process, with fine grained state persistence, may be an entirely tractable problem. The component can throw a fault and restart in a similar state. Minimal work is likely in the calling functions.

- Placing a component boundary around a library, called in-process from the client (single or multi threaded), which has little internal state persistence, is likely to require a complete redesign if we don't want the CHERI fault to create system unreliability.

- Converting the entire stack to CHERI purecap, whilst providing strong assurances against memory vulnerabilities, is likely to create critical exceptions almost anywhere in the stack. Serious analysis of the reliability implication trade off is needed.
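The first case above (a component that faults and restarts in a similar state) can be sketched as a supervisor pattern. This is an analogy only: a Python exception stands in for a CHERI fault, and the `Counter` component, `CompartmentFault` and `supervise` are hypothetical names invented for this example.

```python
class CompartmentFault(Exception):
    """Stand-in for a fault raised when a compartment violates memory safety."""


class Counter:
    """Toy component with fine grained state persistence (a checkpoint per step)."""

    def __init__(self):
        self.checkpoint = 0

    def step(self, value, poison=False):
        if poison:
            raise CompartmentFault("simulated capability violation")
        self.checkpoint += value   # persist state after each successful step
        return self.checkpoint


def supervise(inputs):
    """Run the component, restarting it from its last checkpoint after a fault."""
    component = Counter()
    restarts = 0
    for value, poison in inputs:
        try:
            component.step(value, poison)
        except CompartmentFault:
            saved = component.checkpoint
            component = Counter()          # restart the faulted component
            component.checkpoint = saved   # resume in a similar state
            restarts += 1
    return component.checkpoint, restarts
```

The caller only needs the `try`/`except` wrapper, which is why this case involves little work in the calling functions. A library with no persisted state has nothing equivalent to `checkpoint` to resume from, which is where the redesign cost in the second case comes from.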

In summary: each CHERI intervention needs careful analysis in terms of:

- Is the tradeoff between memory vulnerability and system exceptions tolerable for the target application?

- Does the current architecture, in combination with the CHERI use case, lend itself to “usable” recovery, or will it trigger a major system redesign?

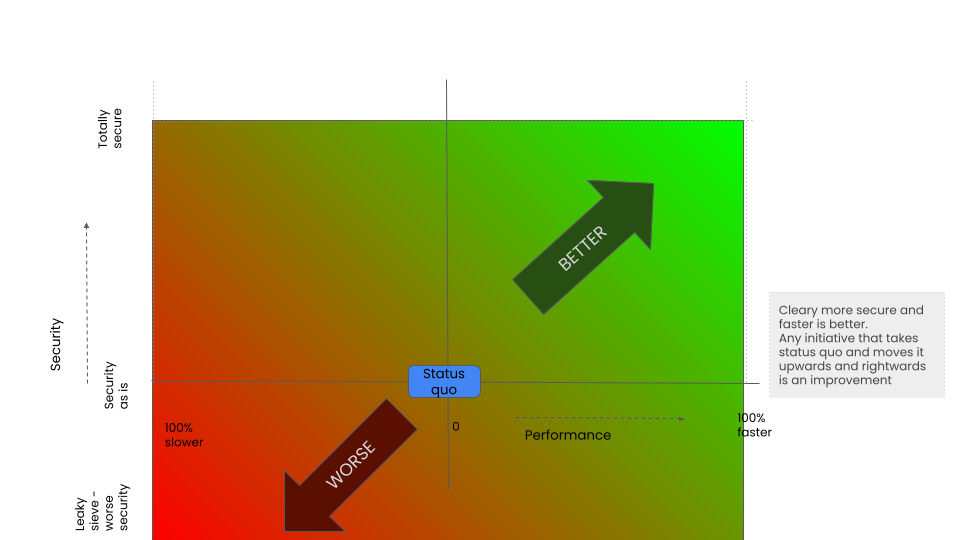

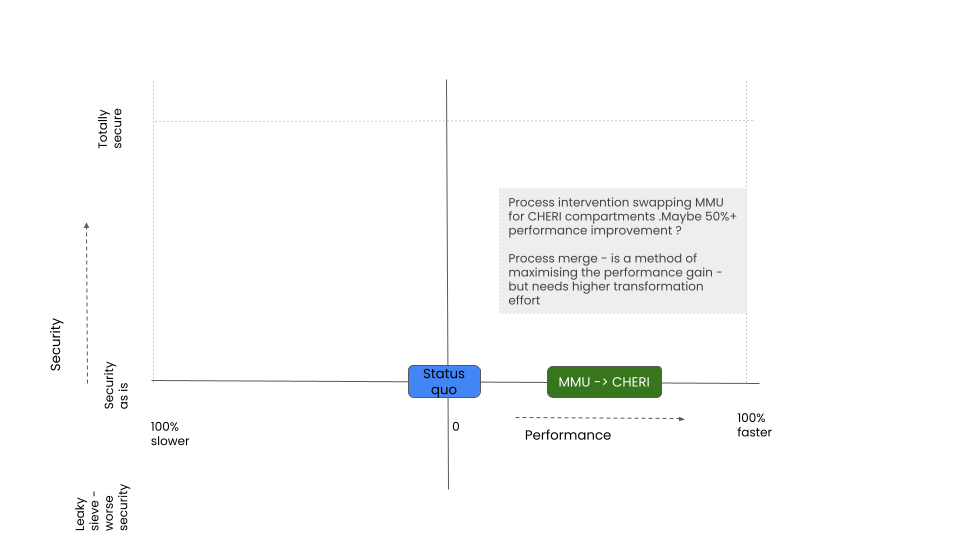

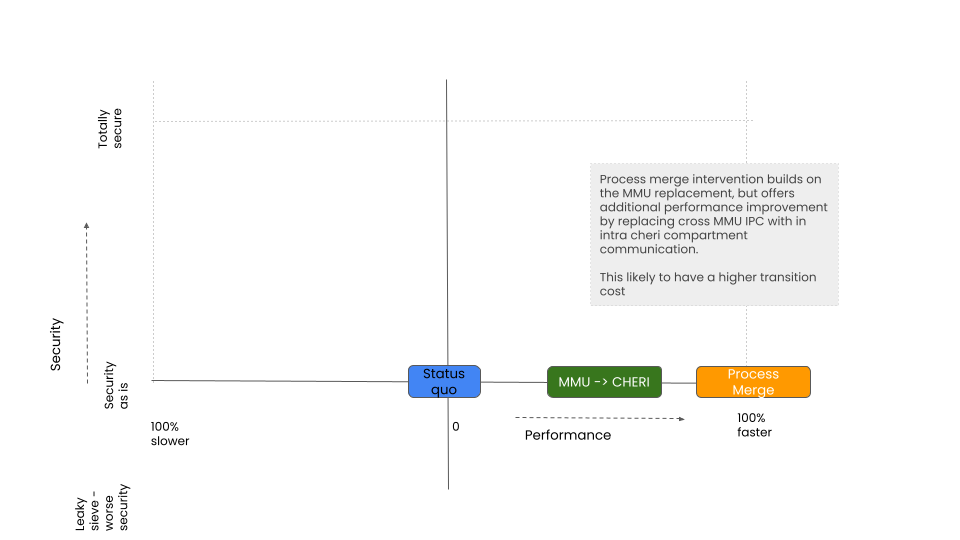

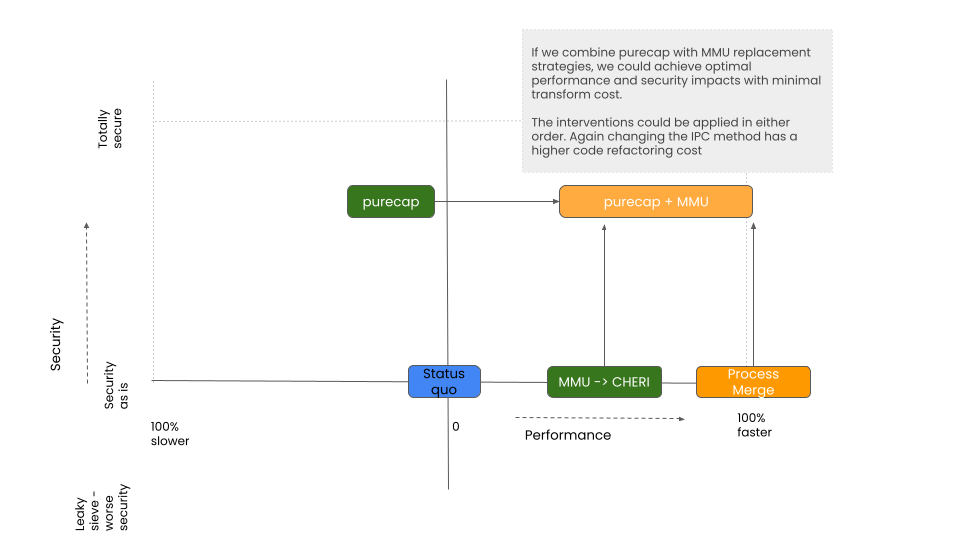

Visualising the intervention impacts

Each intervention is characterised over three dimensions. We will chart two of them directly:

- Security impact on the y axis

- Performance on the x axis

3D charts are hard to visualise on paper, so we will approximate transformation cost using a colour gradient: green for easy, orange for medium, red for hard.

Links to diagram source:

It is important to note that the dimensions used for security and performance are technical, engineering measures only. When we translate these impacts into economic costs, both scaling factors and non-linearities need to be accommodated, and these factors shift when we look at different markets. For example:

- A 10% performance decrease has a different cost for a consumer electronics device than for a satellite system

- The economic costs of a 10%, 20% and 30% performance decrease are likely to scale non-linearly, and again differently by market.
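The two points above (a market specific scaling factor and a non-linearity) can be sketched with a simple power law. The functional form, the parameter values and the market names in `MARKETS` are hypothetical, chosen only to show the shape of the argument; they are not measured data.

```python
def economic_cost(perf_decrease_pct, scale, exponent):
    """Map a technical performance decrease (%) to an economic cost (arbitrary units)."""
    return scale * (perf_decrease_pct ** exponent)


# Hypothetical market parameters, chosen only to show the shape of the curves.
MARKETS = {
    "consumer_electronics": {"scale": 1.0, "exponent": 1.0},   # roughly linear
    "satellite": {"scale": 5.0, "exponent": 2.0},              # steeply non-linear
}

costs = {
    market: [economic_cost(p, **params) for p in (10, 20, 30)]
    for market, params in MARKETS.items()
}
```

Under these assumed parameters, tripling the performance decrease triples the cost in one market but multiplies it by nine in the other, so the same technical chart can imply very different economic conclusions.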

More on this in a follow up document

CHERI use case comparison: the intervention options

Intervention overview

We have tried to list out the distinct high level coarse grained interventions. We have done our best to distil these from the existing documents, some of which are listed at the bottom. It is likely there are some misunderstandings here.

Each listed intervention is distinct in terms of

- What you need to do to make use of it (cost)

- What you get afterwards (benefit)

Crudely, there appear to be four coarse grained categories:

- PureCap: fine grained capabilities, mapping to C/C++ artefacts

- Auto compartments: a suite of compartmentalisation strategies that can hijack either operating system notions or compiler artefacts. As such they are relatively cheap to implement, but they are limited to pre-existing conceptual boundaries.

- Manual compartments: novel compartment boundaries that can't be auto inherited. These have to be manually added by the developer, so they cost more to design and implement. But they do introduce new boundaries that can be policed.

- Performance (memory isolation optimisation strategies): swapping out MMU process isolation for CHERI compartments, for performance optimisation reasons

TODO: The naming of the distinct interventions needs correcting/improving

TODO: Some additional thought might be needed to clearly state which interventions can be used in a complementary fashion

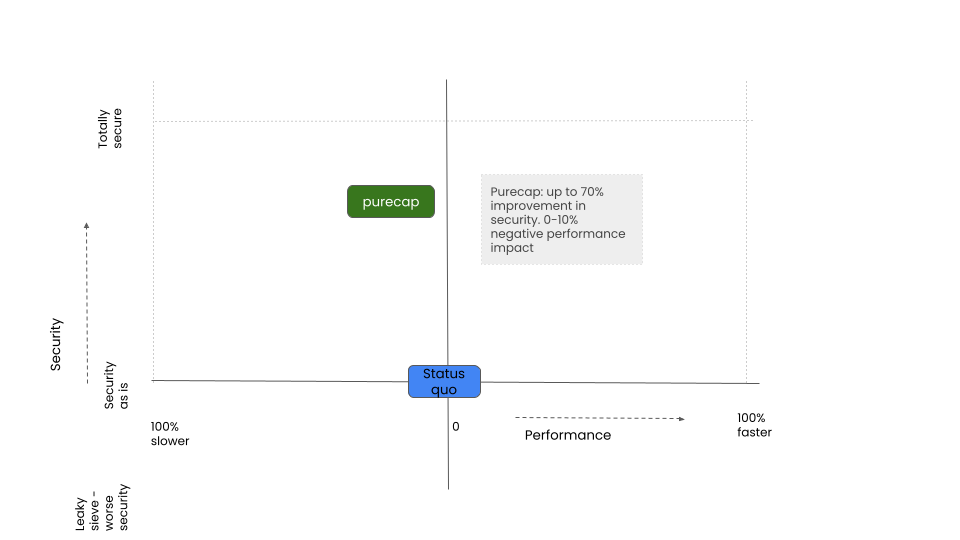

PureCap

Converts all C/C++ code abstraction to use full capabilities

Protects against a large array of memory based vulnerabilities.

Preconditions

- CHERI hardware

- CHERI aware kernel

- C/C++ tool chain

Effort

Each process must be ported to a purecap version. Includes all link dependencies. Effort can vary widely depending on target process.

The port effort can be split into three phases:

- Compile: produce a binary with no errors

- Run: a basic test (does it work, a couple of times)

- Test/QA: fully test in order to bring reliability up to the level of the originating system

Testing is where all the hard work is. The first two steps are generally quite easy for most target components. But the CHERI benefits are not commercially realisable until QA is complete for that component.

It should also be noted that the target process code will be (or should be) defensively written to protect against all common errors. The minute we turn purecap on, we create potential errors in parts of the code where errors were not hitherto expected or predicted by the developer. Considerable effort is likely to be needed to bring the system up to the original reliability level.

It may be possible to reduce some of this effort through containerisation strategies. Examples?

However, when considering containerisation we should be careful to separate the containerization benefits into

- Containerisation necessary to ameliorate the cost of writing “resilience code” to benefit from purecap vulnerability reductions (this should just be added to the development cost of the PureCap proposition)

- Containerisation that offers additional vulnerability reduction benefits over and above purecap

Very rough, finger in the air estimates, presuming good quality QA to commercial grade, would be one man month of effort per process for basic scenarios. Complex interpreters and compilers, with highly abstract and dynamic data structures, could take up to twelve man months. Thoughts? What is the current consensus? Has anyone targeted commercial grade reliability, and/or identified patterns for capturing the additional CHERI exceptions at scale?
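To show how these rough per-process figures would roll up into a system level estimate, here is a minimal sketch. The two effort figures come from the paragraph above; the example system (eight standard processes and one interpreter) and the names `EFFORT_MM` and `port_effort` are hypothetical.

```python
# Rough figures taken from the estimate above (man months per process).
EFFORT_MM = {"standard": 1, "complex": 12}


def port_effort(process_kinds):
    """Sum the estimated purecap port effort, in man months, over a list of process kinds."""
    return sum(EFFORT_MM[kind] for kind in process_kinds)


# Hypothetical system: eight standard processes and one interpreter.
system = ["standard"] * 8 + ["complex"]
total_mm = port_effort(system)
```

In this made-up system the single complex component accounts for more than half of the total, which suggests that classifying processes by type matters more to the estimate than counting them.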

Performance Impact

Decreases performance by 0-10%. This evaluation is still work in progress.

This approach will have a cost compared to the same system running unported on non-CHERI hardware and kernel. There are no precise figures yet, and the cost will vary by application.

Note: going forward it may be better to characterise performance impact with a table, measuring impact over different process types (CPU heavy vs memory heavy, etc.).

Security Impact

Removes <70% of vulnerabilities.

The headline figure is the removal of 70% of active memory vulnerabilities.

** Currently only spatial protection on Morello; temporal protection has yet to be added to the main branch ??

** There is an SBOM tool (work in progress) which attempts to rapidly determine this impact based on a description of the target system (SBOM). But there may be errors in our methodology. Also, vulnerability volume is a crude measure. A better measure is the time/effort spent fixing prioritised vulnerabilities. It is not clear exactly how Google/Microsoft came up with the numbers. These are important points to clarify.

Summary

| Vulnerabilities | -70%? | |
|---|---|---|
| Performance | +10%? | |
| Dev | Standard | 1MM/Process |
| | Compiler/Interpreter | 12MM/Process |

Auto: Thread centric

Uses the OS notion of tasks to isolate tasks from one another using CHERI compartmentalisation. This is an OS based, automatically induced compartment.

Preconditions

- CHERI hardware

- CHERI aware kernel

- C/C++ tool chain

- PureCap target

Effort

The set up of compartments can be added to the OS at the task management level.

Does the relevant binary code need to be purecap ? Presumably yes, as we need the CHERI enhanced ABIs to track the intent of the original developer.

Performance Impact

What are the performance implications? Standard 0-10% range ?

Security Impact

Does this change the vulnerability profile compared to a purecap version of the same system? What vulnerabilities are additionally avoided through thread based compartments?

This question will come up for the other interventions.

If PureCap is a precondition for task based compartmentalisation, then we take CAMV (CHERI addressable memory vulnerabilities, the 70%) off the table. These are already covered. What we have left is CIMV (CHERI invisible memory vulnerabilities), the 30%. Of that 30%, can we characterise, give examples of, or otherwise measure the type of vulnerabilities that a thread based compartment protects against?

Is it fair to assume there is a difference in the vulnerability intervention?

- Purecap is detect and eject: the error is detected and an exception is generated.

- Containerisation is just that. Presumably the relevant issues are not detected within the container; we just get notified when the issue permeates beyond the container boundary.

Describing precisely what the intersection (CIMV ∩ CCV) is for each compartmentalisation boundary is critical to identifying what its security value proposition is. In other words, for each compartmentalisation strategy, can we list the vulnerabilities that are not already caught by purecap but can be contained by this specific boundary?

Summary

| Vulnerabilities | ??% |
|---|---|
| Performance | ??% |
| Dev | ??% |

Auto: Dynamic Library based

Library based compartmentalization can come in two forms

- Static library - the tool chain will have to annotate library compartments at link time

- Dynamic library - the operating system will have to annotate compartments at run time

First we will consider dynamic library based compartmentalisation.

Preconditions

- CHERI hardware

- CHERI aware kernel

- C/C++ tool chain

- PureCap version of target binaries

Effort

Porting: this model assumes purecap porting is already complete. Additional requirement is low - in that the annotation method is a one off process at the load library stage

Adaptation: what additional porting work is needed to adapt code to this model? Presumably, like all methods, more error handling to ensure resilience?

Performance Impact

Standard purecap impacts

Additional overhead through trampoline for compartment switches.

Security Impact

Additional to purecap - some protection against use of global variables?

Anything else ?

Summary

| Vulnerabilities | ??% |
|---|---|
| Performance | ??% |
| Dev | ??% |

Auto: Static Library based

The other option is static library based compartmentalisation. In this instance the compartments need to be annotated by the linker instead of the operating system

Has anyone done this? Is it doable?

Preconditions

- CHERI hardware

- CHERI aware kernel

- C/C++ tool chain

- PureCap version of target binaries

Effort

Porting: this model assumes purecap porting is already complete. Additional requirement is low - in that the annotation method is a one off process at compilation time.

Adaptation: what additional porting work is needed to adapt code to this model? Presumably, like all methods, more error handling to ensure resilience?

Performance Impact

Standard purecap impacts

?? Is a trampoline switch needed for the static library model

Security Impact

Presumably the same as dynamic libraries.

Additional to purecap - some protection against use of global variables?

Anything else ?

Summary

| Vulnerabilities | small% |
|---|---|
| Performance | small% |
| Dev | small% |

Auto: Other possibilities

- File based - compile time

- Class based - compile time

- ??

Manual methods

We have currently determined three coarse grained manual intervention categories

- LegacyABI - Additional Compartments

- ProcessMerge

- Wholesale design

What is missing? What other patterns have been tested or theorised?

The three high level categories are as follows

Manual: LegacyABI - Additional Compartments

In this model, the developer takes an existing system, described by existing process boundaries and existing ABI interfaces.

We assume the target processes are shifted to purecap.

The developer then adds additional custom compartment boundaries that reflect the problem domain and hence also the security threats particular to this application.

Preconditions

- CHERI hardware

- CHERI aware kernel

- C/C++ tool chain

- PureCap version of target binaries

Effort

Code needs to be instrumented with the new compartments.

At this stage it is not fully clear how the upstream and downstream dependencies work. This model would seem to imply the introduction of an orthogonal dependency:

- The pre-existing system is described by compiler and runtime dependencies, manifest through the ABI

- By introducing non ABI aligned components we potentially introduce a secondary, orthogonal dependency graph, which the developer will need to be cognisant of (at minimum for error handling).

To illustrate:

- You develop library B, and add your own compartment model

- Library B depends on library C

- Application A uses library B

To what extent do application A and library C need to know about the compartments you define in library B?

In other words, when compartment boundaries do not align with programmatic boundaries, does it introduce a different layer of dependency management?
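One way to make the A/B/C question above concrete is to model both graphs and ask which modules are exposed to which compartments. The sketch below is a toy model under stated assumptions: the compartment names `B.parser` and `B.crypto` are invented, and "exposed" here simply means "reachable through the programmatic dependency graph", which may not capture everything a real CHERI compartment model implies.

```python
# Programmatic dependencies from the example: A uses B, B depends on C.
DEPENDS_ON = {"A": ["B"], "B": ["C"], "C": []}

# Hypothetical compartments defined inside library B by its developer.
COMPARTMENTS = {"B": ["B.parser", "B.crypto"]}


def exposed_to(module, depends_on, compartments):
    """Compartments whose faults could surface in `module` through its dependencies."""
    seen, stack, exposed = set(), list(depends_on[module]), []
    while stack:
        dep = stack.pop()
        if dep in seen:
            continue
        seen.add(dep)
        exposed.extend(compartments.get(dep, []))
        stack.extend(depends_on[dep])
    return sorted(exposed)
```

In this simple model application A is exposed to both of B's compartments (at minimum for error handling), while library C is not. Whether C is really unaffected, for example when code inside a B compartment calls into C, is one of the open questions this section raises.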

Performance Impact

Unknown ??

Security Impact

The usual question: what security additionality do we get over purecap?

Summary

| Vulnerabilities | ??% |
|---|---|
| Performance | ??% |
| Dev | ??% |

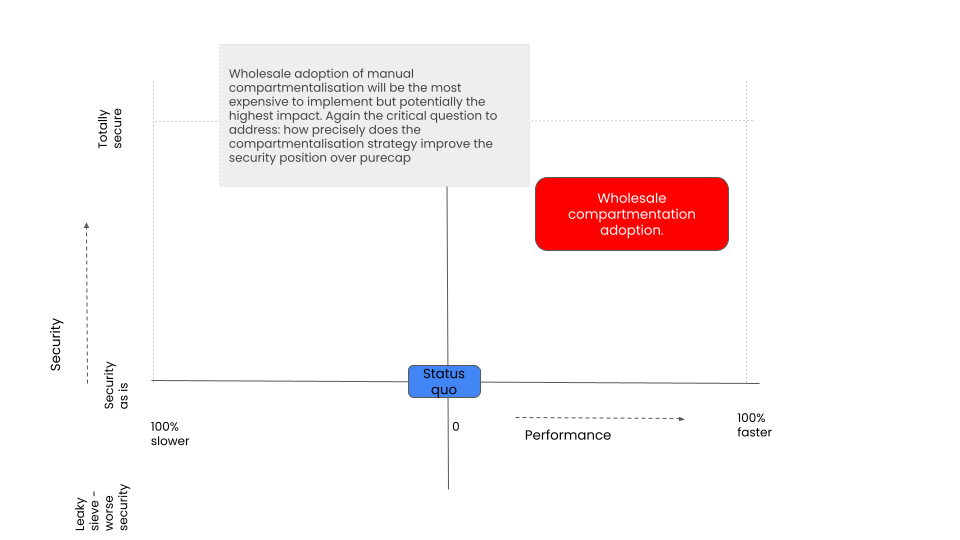

Manual: Wholesale

The final intervention requires a fundamental rethink of OS and application boundaries.

It potentially paves the way for a full system that has very high performance characteristics and strong vulnerability protection. However, this model does not invite direct component by component vulnerability analysis, and the component boundary model changes hugely.

This will be expensive to achieve: this is not a porting problem, it is a redesign problem.

It seems unlikely that this will be a viable option for people attempting to port a legacy C/C++ codebase.

It seems likely that, to fully realise the benefits of this model, the appropriate CHERI semantics (compartments) need to be manifest within the developer language. This might call for a new programming language, or first order primitives being added to an existing one.

Preconditions

- CHERI hardware

- CHERI aware kernel

- C/C++ tool chain

- PureCap version of target binaries

Effort

Huge.

Performance Impact

Huge

Security Impact

Huge

Summary

| Vulnerabilities | high% |
|---|---|
| Performance | high% |
| Dev | high% |

TODO

Perf: Colocation Process centric MMU replacement

This intervention takes standard processes, but runs them within the same memory space (colocated), using CHERI isolation methods instead of standard MMU isolation.

This use case seems very interesting but it is hard to find details.

Some of the work in progress can be traced in these links

https://github.com/CTSRD-CHERI/cheripedia/wiki/Colocation-Tutorial

https://github.com/CTSRD-CHERI/cheribsd/tree/coexecve

https://github.com/CTSRD-CHERI/cheribsd/pull/1659

https://www.cl.cam.ac.uk/research/security//ctsrd/pdfs/20220228-asplos-cheri-tutorial-full.pdf

It would be good to have pointers to a fuller write up on why this might be an interesting model to look at. And what the expected benefits might be.

Preconditions

- CHERI hardware

- CHERI aware kernel

Effort

Low porting requirement. The process does not have to be ported to purecap.

The MMU isolation is swapped out for CHERI compartmentalisation which should be more performant

Is there a link describing how this works precisely ?

Performance Impact

Increase performance. More efficient CHERI compartmentalisation.

One question about the performance uplift: if this process is the only thing running on the system, you will presumably hardly notice anything. You will only see the performance increase when other processes (of the same type or different) are also using CHERI compartmentalisation. In other words, we expect the performance impact to be proportional to the aggregate number of process context switches that are avoided, relative to the process centric model.

Is this right ??

Have any tests been done ??
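The proportionality hypothesis above can be written down as a one-line model, which makes it easier to test against measurements once they exist. The per-switch costs below are hypothetical placeholders, not measured figures, and `time_saved_us` is an illustrative name.

```python
def time_saved_us(switches_avoided, mmu_switch_us, cheri_switch_us):
    """Estimated time saved when MMU context switches become CHERI compartment switches."""
    return switches_avoided * (mmu_switch_us - cheri_switch_us)


# Hypothetical per-switch costs in microseconds; real figures would need measurement.
MMU_SWITCH_US = 5.0
CHERI_SWITCH_US = 1.0

# A lone process avoids no context switches, so the model predicts no uplift.
single_process = time_saved_us(0, MMU_SWITCH_US, CHERI_SWITCH_US)

# A busy system with many colocated processes avoids many switches.
busy_system = time_saved_us(10_000, MMU_SWITCH_US, CHERI_SWITCH_US)
```

If the hypothesis holds, measured uplift should grow linearly with the number of avoided switches; a clearly non-linear result would suggest other effects (for example cache or TLB behaviour) are also at play.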

Security Impact

None

No change in vulnerability profile with respect to legacy MMU process isolated version

Summary

| Vulnerabilities | 0% |
|---|---|
| Performance | +?? (high) |
| Dev | Low |

Perf: Colocation with optimised IPC

In this model, the developer takes two or more existing processes and rearchitects them so they can run in the same memory space, isolated using CHERI compartments, thereby making the IPC more efficient.

This builds on the Process MMU replacement intervention, but re-architects legacy code to optimise the interprocess communications.

Preconditions

- CHERI hardware

- CHERI aware kernel

- C/C++ tool chain

- PureCap version of target binaries

Effort

There may be several ways of doing this:

- By hand: changing large parts of the code, ripping out the IPC methods and replacing them with CHERI aware, optimised cross talk

- By making lightweight OS changes. For example, is it possible to create an alternative implementation of Linux domain sockets (and/or other IPC methods) that uses CHERI invisibly under the hood?

The method needs a review of the legacy IPC architecture for the application in question.

Performance Impact

Potentially large positive impact

Security Impact

This intervention on its own has no vulnerability/security impact, unless it is combined with another strategy.

Summary

| Vulnerabilities | none |
|---|---|
| Performance | high% |
| Dev | ??% |

Combined purecap and process merge

The scenarios above are largely considered in isolation.

Some interventions can be layered; first one then the other, or performed at the same time.

Intuitively, it would seem that purecap applied in combination with process-merge, produces an optimal impact with minimal transformation cost.

This however needs testing and fully evidencing

Further information needed

To help inform strategy and commercial decision making an attempt has been made to isolate distinct CHERI interventions, and their relative cost benefits.

To improve this analysis we need to remove as many of the uncertainties as possible.

The first major uncertainty is the classification of the primary use cases themselves: Are they distinct options? Are the dependencies fully understood? Are there any major options missing?

Assuming that the intervention list is close to complete, we then have work to do clarifying the estimates of security impact, performance and transformation cost, if we are to better calibrate the tradeoffs.

What follows is a list of research questions that need to be bottomed out.

Is the list of use cases complete and correct? Have the dependencies been correctly expressed?

What is the qualified measure of security impact for a purecap intervention? Is it 70%?

If we are using “non exploitable vulnerability” as a measure of security impact, are we consistent in our method of counting? (Are vulnerabilities prequalified by severity, etc.?)

Can we characterise the security additionality of purecap plus compartmentalisation methods (e.g. lib or task based)? What additional % of vulnerabilities do we expect these strategies to give us?

Is 0-10% a reasonable performance cost to expect across purecap and compartment strategies? Can we calibrate better?

For the colocation models:

- Can we have pointers to a good writeup of the method and its implications?

- Can we expect this to be merged into the main branch any time soon?

- Do we have estimates of the potential positive performance impact, however crude?

- Is it reasonable to treat the performance benefit of colocating multiple processes and the performance increase from optimised IPC as separate?

Reliability

- Do we have any market research to understand how different target applications will respond to memory vulnerabilities being shifted into reliability issues? This will presumably vary widely. This is an important question to bottom out, as it could determine whether the intervention is viable or not.

- Are there documented design patterns for handling these new errors?

- Specifically, are there examples of how to use "compartmentalisation" to catch errors, as per “Assessing the Viability of an OpenSource CHERI Desktop Software Ecosystem” (compartmentalisation to mitigate application crash)?

- Do we have estimates of the coding effort required to implement these patterns?

Interim Conclusions

The provisional interim analysis

- Purecap has a strong value proposition for robustifying legacy code: big impact for little cost if the preconditions are met. We just need data to convince people

- For the majority of compartment interventions (compartmentalising existing code), it is unclear where the security additionality is. We need better documentation on how to characterise, in volume, the type of vulnerabilities that each intervention prevents and that purecap does not. (This excludes the model of containing purecap exceptions/crashes, which is just a cost of purecap porting in this analysis)

- But the big "so what" seems to be about performance. There are potentially huge performance improvements from rethinking OS and application architecture. But this does not seem a likely intervention for legacy code port projects. This is a bottom up re-architecture of an OS, so it is a different game, to be played to a different market over a different timeline

Distillation: there appear to be two distinct value propositions for two different target markets.

- Securing Legacy Code: remove whole classes of memory vulnerabilities from legacy C/C++ codebases, and the implied cost - a CHERI purecap intervention

- Faster more secure compute paradigm: create a complete CHERI aware development stack, which appears more appealing for greenfield development projects. - introducing CHERI compartmentalization concepts into OS, Tool chain and possibly programming language. (CHERI as a security/performance yield curve optimization method.)

An addendum to point 2: it does seem possible that combining a purecap strategy with a lightweight MMU process merge strategy may offer a sweet spot, giving maximum security and performance benefit with minimal transformation cost. This needs looking into in more detail.

Observation: from a purely commercial perspective, performance is an easier sell than security. If we can better evidence the positive performance impacts, it may be worth exploring different commercial focussed messaging around this theme.

The security costs CHERI is trying to fix are already costed into the business, but even minor performance increases (without sacrificing security, and indeed improving it) lead directly to increased revenue potential.

Feedback on the above categorisation of CHERI interventions and the respective cost benefit analysis, is greatly appreciated.

References

- CHERI compartmentalisation for embedded systems https://www.cl.cam.ac.uk/techreports/UCAM-CL-TR-976.pdf

- CHERI: A Hybrid Capability-System Architecture for Scalable Software Compartmentalization https://ieeexplore.ieee.org/document/7163016

- https://www.cl.cam.ac.uk/research/security/ctsrd/pdfs/2015ccs-soaap.pdf

- Security analysis of CHERI ISA https://github.com/microsoft/MSRC-Security-Research/blob/master/papers/2020/Security%20analysis%20of%20CHERI%20ISA.pdf

- https://www.capabilitieslimited.co.uk/_files/ugd/f4d681_e0f23245dace466297f20a0dbd22d371.pdf

- https://ctsrd-cheri.github.io/cheri-faq/

Edit Links

Public link: https://specs.manysecured.net/snbd/cheri-use-case

GitHub: https://github.com/TechWorksHub/ManySecured-WGs/tree/main/ManySecured-SNbD

GitHub issues: https://github.com/TechWorksHub/ManySecured-WGs/issues

Google link provided for people wanting to comment but no GitHub access:

https://docs.google.com/document/d/1zZKH31wK2E_s_b2czB_mXo8PKXK7cvQPIP-hG_ZCXeI/edit

email comments: nick@nqminds.com

Note: this is a live document and is built continuously from the github repo.